Difference between Unicode and ASCII with table

We explain the difference between Unicode and ASCII with a table. Unicode and ASCII are character encoding standards used throughout the IT industry. Unicode is the standard used to encode, represent, and handle text in most of the world's writing systems, while ASCII (American Standard Code for Information Interchange) represents a small set of characters: English uppercase and lowercase letters, digits, symbols, and control codes.

Both represent text for telecommunications devices and computers. ASCII encodes only a small, fixed set of letters, digits, and symbols, while Unicode encodes a far larger number of characters.

The main difference is that Unicode represents the letters of English, Arabic, Greek, and many more languages, along with mathematical symbols, historical scripts, and more, while ASCII is limited to 128 characters: uppercase and lowercase English letters, digits (0-9), punctuation symbols, and control codes.

Unicode can be called a superset of ASCII: its first 128 code points are identical to ASCII, and it adds many more characters beyond them. Both standards work by assigning a number to each character, because computers store numbers rather than letters.
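The character-to-number mapping described above can be sketched in Python with the built-in `ord` and `chr` functions. The helper names `to_code_points` and `from_code_points` are illustrative only, and the type hints assume Python 3.9 or later.

```python
def to_code_points(text: str) -> list[int]:
    """Return the numeric code point of each character in text."""
    return [ord(ch) for ch in text]


def from_code_points(codes: list[int]) -> str:
    """Rebuild a string from a list of code points."""
    return "".join(chr(code) for code in codes)


codes = to_code_points("Hi!")
print(codes)                    # [72, 105, 33]
print(from_code_points(codes))  # Hi!
```

For characters in the ASCII range, the numbers returned here are the same in ASCII and in Unicode.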

Comparison table between Unicode and ASCII (in tabular form)

| Comparison parameter | Unicode | ASCII |
| --- | --- | --- |
| Definition | Unicode is the IT standard that encodes, represents, and handles text for computers, telecommunications devices, and other equipment. | ASCII is the IT standard that encodes characters only for electronic communications. |
| Name | Unicode is not an abbreviation. Its character repertoire is kept in sync with the Universal Character Set (UCS) of ISO/IEC 10646. | ASCII is short for American Standard Code for Information Interchange. |
| Function | Unicode represents a large number of characters, such as letters from many languages, mathematical symbols, historical scripts, etc. | ASCII represents a fixed set of characters, such as English uppercase and lowercase letters, digits, and symbols. |
| Use | Its encoding forms UTF-8, UTF-16, and UTF-32 use 8-bit, 16-bit, and 32-bit code units to represent any character. ASCII is a subset of Unicode. | It uses 7 bits to represent any character. It does this by converting characters to numbers. |
| Occupied space | Unicode supports a very large number of characters, and characters outside the ASCII range take more than one byte. | ASCII supports only 128 characters and takes up less space. |

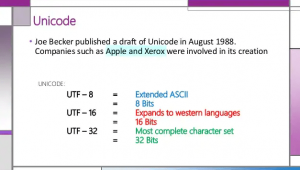

What is Unicode?

Unicode is the IT standard used to encode, represent, and handle text on computers, telecommunications devices, and other equipment. It is maintained by the Unicode Consortium, and its character repertoire is kept synchronized with the Universal Character Set (UCS) defined in ISO/IEC 10646.

It encodes a wide range of characters: text in many languages (including bidirectional text such as Hebrew and Arabic, which are written right to left), mathematical symbols, historical scripts, and much more.
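As a small illustration of this range, Python's standard `unicodedata` module can look up the name and bidirectional class that Unicode records for a character. The `describe` helper is a hypothetical name chosen for this sketch.

```python
import unicodedata


def describe(ch: str) -> tuple[str, str, str]:
    """Return (code point as U+XXXX, character name, bidirectional class)."""
    return (f"U+{ord(ch):04X}", unicodedata.name(ch), unicodedata.bidirectional(ch))


# Latin capital A, Hebrew alef, Arabic alef
for ch in ("A", "\u05d0", "\u0627"):
    print(describe(ch))
# ('U+0041', 'LATIN CAPITAL LETTER A', 'L')
# ('U+05D0', 'HEBREW LETTER ALEF', 'R')
# ('U+0627', 'ARABIC LETTER ALEF', 'AL')
```

The classes `R` and `AL` mark right-to-left characters, while `L` marks left-to-right ones.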

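Unicode defines three encoding forms, namely UTF-8, UTF-16, and UTF-32, which use 8-bit, 16-bit, and 32-bit code units respectively. UTF-8 and UTF-16 are variable-length: a character takes one to four bytes in UTF-8 and two or four bytes in UTF-16, while UTF-32 always uses four bytes. Unicode is widely used in programming languages (Java, etc.) and modern operating systems.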
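To make the size difference between the three encoding forms concrete, here is a minimal Python sketch that counts the bytes one character occupies in each. The `encoded_sizes` helper is an illustrative name, and the little-endian codec variants are used only so that no byte order mark is added to the count.

```python
def encoded_sizes(ch: str) -> dict[str, int]:
    """Return the number of bytes ch occupies in each Unicode encoding form."""
    return {enc: len(ch.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")}


# Letter A, e with acute accent, euro sign, grinning face emoji
for ch in ("A", "\u00e9", "\u20ac", "\U0001F600"):
    print(f"U+{ord(ch):04X}", encoded_sizes(ch))
# U+0041 {'utf-8': 1, 'utf-16-le': 2, 'utf-32-le': 4}
# U+00E9 {'utf-8': 2, 'utf-16-le': 2, 'utf-32-le': 4}
# U+20AC {'utf-8': 3, 'utf-16-le': 2, 'utf-32-le': 4}
# U+1F600 {'utf-8': 4, 'utf-16-le': 4, 'utf-32-le': 4}
```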

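Unicode supports a large number of characters, so text outside the ASCII range takes more space per character. ASCII is part of Unicode: the first 128 Unicode code points are the ASCII characters, and UTF-8 encodes each of them as the same single byte, so any valid ASCII text is also valid UTF-8.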
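The compatibility between ASCII and UTF-8 can be checked with a short Python sketch. The function name `same_bytes_in_ascii_and_utf8` is hypothetical; it only relies on the standard `str.encode` method.

```python
def same_bytes_in_ascii_and_utf8(text: str) -> bool:
    """Return True if text is pure ASCII and encodes to identical bytes in UTF-8."""
    try:
        return text.encode("ascii") == text.encode("utf-8")
    except UnicodeEncodeError:
        # At least one character lies outside the 128 ASCII code points.
        return False


print(same_bytes_in_ascii_and_utf8("Hello, world!"))  # True
print(same_bytes_in_ascii_and_utf8("caf\u00e9"))      # False
```

The second call returns `False` because the accented letter has no ASCII encoding at all, not because the two encodings disagree about it.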

What is ASCII?

ASCII is the encoding standard used for encoding characters in electronic communications. It is mainly used for the English alphabet: lowercase letters (a-z), uppercase letters (A-Z), symbols such as punctuation marks, and digits (0-9).

The American Standard Code for Information Interchange, or ASCII, encodes 128 characters, predominantly for English text, and is still used in modern computers and programming.

ASCII uses 7 bits to encode any character and therefore takes up little space. ASCII was used extensively for character encoding on the World Wide Web, and its characters still make up the syntax of formats such as HTML.

ASCII maps each character to a number because computers store numbers, not letters. Generally speaking, this mapping process is called encoding.
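The 7-bit representation can be made visible with a few lines of Python. This is only a sketch: `ascii_bits` is an illustrative helper, and rejecting non-ASCII input with a `ValueError` is a choice made for this example. The type hint assumes Python 3.9 or later.

```python
def ascii_bits(text: str) -> list[str]:
    """Return the 7-bit binary pattern of each ASCII character in text."""
    patterns = []
    for ch in text:
        code = ord(ch)
        if code > 127:
            raise ValueError(f"{ch!r} is not an ASCII character")
        patterns.append(format(code, "07b"))  # zero-padded to 7 binary digits
    return patterns


print(ascii_bits("Az9"))  # ['1000001', '1111010', '0111001']
```

Every ASCII code is at most 127, which is why seven binary digits are always enough.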

Main differences between Unicode and ASCII

- Unicode is the IT standard that encodes, represents, and handles text on computers, while ASCII is the standard that encodes text (predominantly English) for electronic communications.

- Unicode is not an abbreviation, although its repertoire matches the Universal Character Set (UCS) of ISO/IEC 10646, while ASCII stands for American Standard Code for Information Interchange.

- The two differ in function. Unicode encodes a large number of characters, such as the text and alphabets of many languages (including bidirectional text), symbols, and historical scripts, while ASCII encodes the upper and lower case letters of the English alphabet, digits, symbols, etc.

- Unicode uses 8-bit, 16-bit, or 32-bit code units (UTF-8, UTF-16, and UTF-32) to encode a large number of characters, while ASCII uses 7 bits to encode any character because it consists of only 128 characters.

- Unicode text can take up more space because Unicode is a superset of ASCII and its additional characters need more than one byte, while ASCII requires less space.

Final Thought

Unicode is the encoding standard that encodes, represents, and handles text for computers, telecommunications devices, and other equipment, while ASCII, the American Standard Code for Information Interchange, is the standard code used for character encoding in electronic communication.

Unicode covers text in many languages (including bidirectional scripts such as Hebrew and Arabic), mathematical symbols, historical scripts, etc., while ASCII covers English text: uppercase letters (A-Z), lowercase letters (a-z), digits (0-9), and symbols such as punctuation marks.

Unicode has three encoding forms built on 8-bit, 16-bit, and 32-bit code units (UTF-8, UTF-16, and UTF-32), while ASCII uses 7 bits to represent any character. Unicode is a superset of ASCII, and characters outside the ASCII range take up more space.